OpenAI has released a prompt engineering guide that explains how to get better results from large language models (LLMs) such as GPT-4 and OpenAI’s other GPT models.

OpenAI wants users to get better results from its models, so it has published this guide as part of its documentation.

This article summarizes the tactics the guide recommends for getting good results from OpenAI’s LLMs. For more detail, you can also read the full prompt engineering guide.

What is Prompt Engineering?

Prompt engineering is the skill of writing precise instructions that get better results from large language models and other AI systems.

Computers can’t read your mind or guess what you need, but if you write precise instructions, an LLM is far more likely to give you the result you want.

Let’s say you want DALL·E to draw a picture of a cat. If you just say “Draw something”, it could generate anything at all.

But if you say “Draw a beautiful cat with green eyes and a pink bow, standing next to a dog”, it will generate an image that follows those specific instructions. Instructions like these are called prompts.

Let’s look at some other examples:

Normal Prompt: “Translate this sentence.”

Engineered Prompt: “Translate the following English sentence into French: ‘Hello, how are you today?’”

Normal Prompt: “Help me with this code.”

Engineered Prompt: “I’m trying to create a loop in Python to print numbers from 1 to 10. Provide a code snippet and explanation.”

Normal Prompt: “Solve this math problem.”

Engineered Prompt: “Calculate the area of a right-angled triangle with a base of 8 meters and a height of 5 meters.”
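Because the last engineered prompt supplies every value the model needs, you can check its answer yourself with the standard formula for the area of a right-angled triangle, where b is the base and h is the height:

```latex
A = \frac{1}{2} \times b \times h = \frac{1}{2} \times 8\,\text{m} \times 5\,\text{m} = 20\,\text{m}^2
```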

Now that you have a basic understanding of prompt engineering, let’s look at OpenAI’s guide to learn more. Its tactics will help you interact more effectively with ChatGPT and other LLMs.

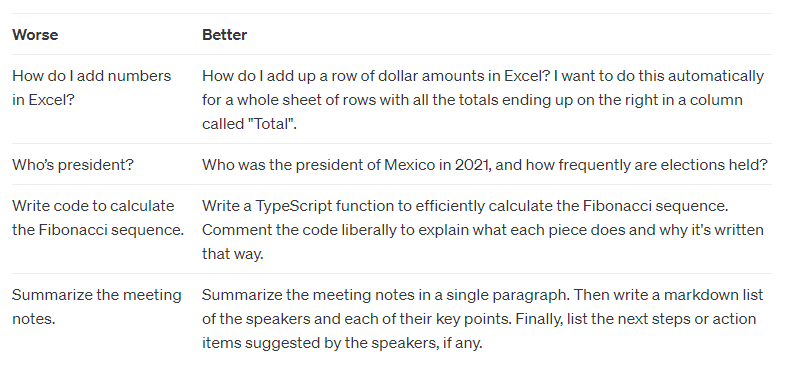

1. Write clear instructions for LLMs

Models can’t read your mind or know what you want. You have to give them a proper set of instructions.

If you don’t like the format, show the model the format you want. If the replies are too long, ask for brief ones; if they are hard to follow, ask for a simpler explanation.

- Include more details in your prompts to generate relevant answers.

- Ask the model to adopt the persona you want.

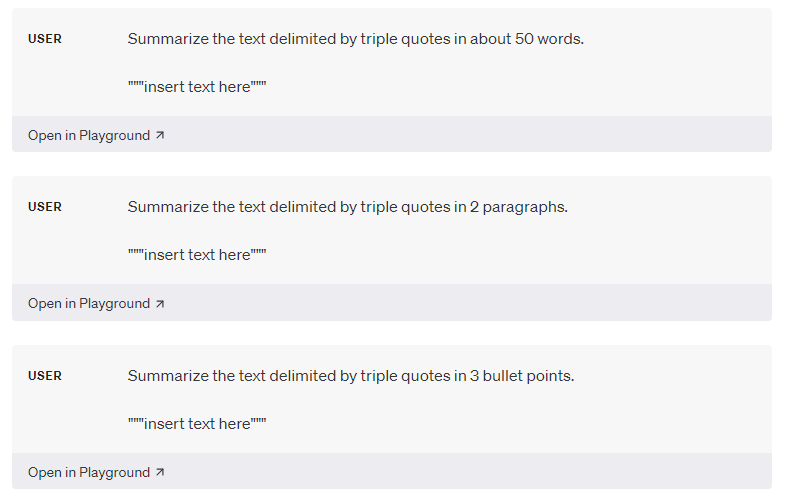

- Use delimiters such as triple quotation marks or XML tags to mark the parts of a prompt that should be treated differently.

- Specify the steps required to complete a task.

- Provide examples of the kind of result you want.

- Specify the desired length of the output, for example a number of words, paragraphs, or bullet points.
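To make these tactics concrete, here is a minimal Python sketch that assembles a chat prompt using a persona, numbered steps, an example of the output format, a length limit, and XML-tag delimiters. It only builds the `role`/`content` message list that chat-style APIs accept; the function name `build_messages` and all of the prompt wording are hypothetical choices for illustration, not text from OpenAI’s guide.

```python
def build_messages(article: str, audience: str = "beginners", max_words: int = 60) -> list[dict]:
    """Assemble a chat prompt that applies the clear-instruction tactics."""
    system = (
        # Persona: tell the model who it should act as.
        f"You are a patient technical writer who explains ideas to {audience}.\n"
        # Steps: spell out how to complete the task.
        "Step 1: Read the article inside the <article> tags.\n"
        "Step 2: Summarize it in one sentence prefixed with 'Summary:'.\n"
        "Step 3: List three key points as bullet points.\n"
        # Example: show the exact output format you expect.
        "Example format:\nSummary: Cats sleep most of the day.\n- point one\n- point two\n- point three\n"
        # Length: state how long the output should be.
        f"Keep the whole answer under {max_words} words."
    )
    # Delimiters: XML tags separate the text to work on from the instructions.
    user = f"<article>{article}</article>"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

You could then send the resulting list to a chat model, for example with `client.chat.completions.create(model="gpt-4", messages=messages)` in the official `openai` Python package (version 1 or later).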

2. Provide some references

Language models can confidently invent fake answers, especially when asked about obscure topics or for citations and URLs.

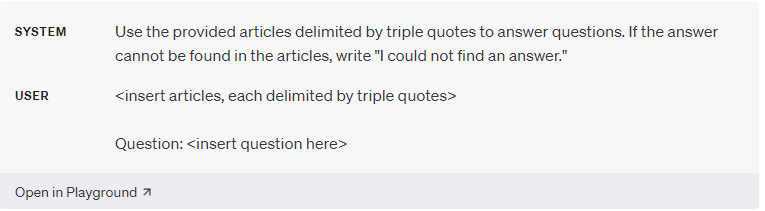

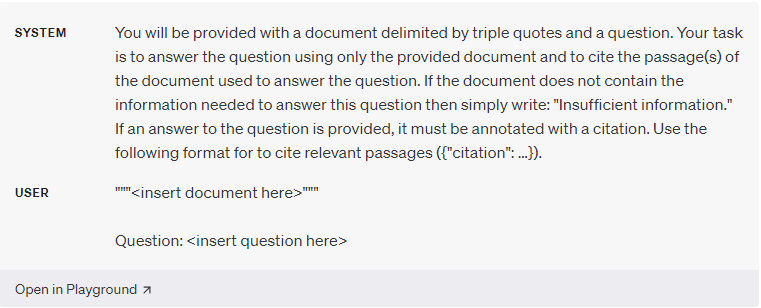

Giving these models reference text helps them answer with fewer fabrications and greater accuracy.

Even so, you can’t fully rely on an LLM to answer important questions, so fact-check its results to confirm they are correct.

- Instruct the model to give answers using reference texts.

- Instruct the model to answer with citations from a reference text.
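Both tactics can be sketched in a few lines of Python: one function builds a prompt that restricts the model to a delimited reference text and asks for exact quotations, and another checks each quoted citation against the reference, which is one cheap way to start fact-checking an answer. The prompt wording, the `Citation:` line format, and both helper names are assumptions for illustration. A citation that passes this check only proves the quote exists in the reference, not that it supports the answer.

```python
import re


def build_reference_prompt(reference: str, question: str) -> str:
    """Ask the model to answer only from the reference text and to quote it."""
    return (
        "Answer the question using only the article delimited by triple quotes.\n"
        'Cite the passages you used, each on its own line as: Citation: "<exact quote>"\n'
        'If the article does not contain the answer, reply: "I could not find an answer."\n\n'
        f'"""{reference}"""\n\n'
        f"Question: {question}"
    )


def unverified_citations(answer: str, reference: str) -> list[str]:
    """Return every quoted citation in the answer that does not appear in the reference."""
    quotes = re.findall(r'Citation: "([^"]+)"', answer)
    return [q for q in quotes if q not in reference]
```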

3. Split complex tasks into simpler subtasks

Just as you would divide a hard task into simpler steps yourself, you can break a complex request to an LLM into simpler subtasks so that the chatbot can give you better results.

Complex tasks tend to have higher error rates than simple ones, so it helps to give the model a series of simpler prompts instead of one large one.

- Use intent classification to figure out what the user is asking for in a query.

- For long conversations in chat applications, give a short summary or filter out unnecessary parts of the previous chat.

- Break down and summarize long documents part by part, and then put together a complete summary step by step.
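The last tactic, summarizing a long document piece by piece, can be sketched in Python as a recursive loop: summarize each chunk, join the partial summaries, and repeat until the text fits in one chunk. The `summarize` argument is a hypothetical stand-in for a call to an LLM, and splitting by word count is a simplifying assumption; real applications usually split by tokens or by document sections.

```python
from typing import Callable


def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 500) -> str:
    """Summarize each chunk, then recursively summarize the joined partial summaries."""
    chunks = chunk_words(text, max_words)
    if len(chunks) <= 1:
        # Short enough to summarize in a single call.
        return summarize(text)
    combined = " ".join(summarize(chunk) for chunk in chunks)
    if len(combined.split()) >= len(text.split()):
        # Guard against endless recursion if the summaries do not shrink the text.
        raise ValueError("summaries are not shorter than the input")
    return summarize_long(combined, summarize, max_words)
```

In a real application, `summarize` might wrap a chat-model call with a prompt such as “Summarize this section in three sentences.”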

4. Give the model time to generate the result

You probably can’t multiply two large numbers in your head instantly, but given some time you can work out the answer.

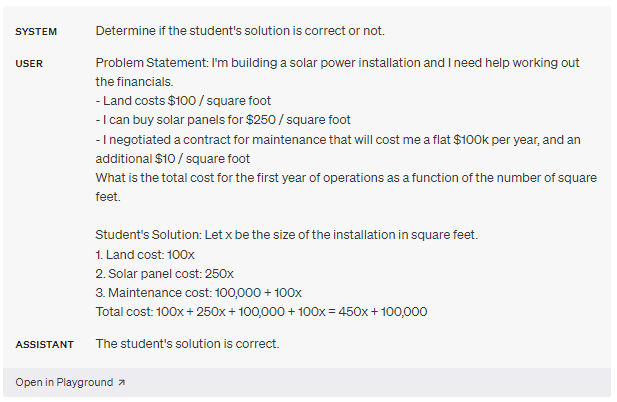

Similarly, LLMs make more reasoning errors when they answer immediately rather than taking time to work out an answer.

- Instruct the model to work out its own solution before rushing to a conclusion.

- Use an inner monologue or a sequence of queries to hide the model’s reasoning from the user.

- Check with the model if it overlooked anything in previous attempts.
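The inner-monologue tactic can be sketched like this: the system prompt asks the model to reason first inside triple quotes and finish with a marked final answer, and the application shows the user only the text after the marker. The prompt wording and the `Final answer:` marker are assumptions chosen for illustration, not a format the guide prescribes.

```python
SYSTEM_PROMPT = (
    "First work out your own solution to the problem step by step. "
    "Put all of your reasoning inside triple quotes (\"\"\"). "
    "After the reasoning, write a line that starts with 'Final answer:'."
)


def extract_final_answer(response: str, marker: str = "Final answer:") -> str:
    """Return only the text after the last marker so the reasoning stays hidden."""
    _, found, answer = response.rpartition(marker)
    if not found:
        # rpartition returns an empty separator when the marker is missing.
        raise ValueError("response has no final-answer marker")
    return answer.strip()
```

For a response such as `"""17 * 28 = 340 + 136 = 476"""` followed by `Final answer: 476`, the user would see only `476`.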

5. Use necessary and useful tools

LLMs perform better when you give them relevant references, and you can supply those by feeding the models the outputs of other tools.

For example, OpenAI’s Code Interpreter lets the model run code, which helps with math and other calculations.

If a tool can handle part of a task more reliably than a language model, hand that part to the tool and combine its output with the model to get better results.

- Efficiently find information by using embeddings-based search for knowledge retrieval.

- Enhance accuracy by allowing the model to execute code or connect with external APIs for calculations.

- Enable the model to use specific functions for better performance.

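Embeddings-based search works by turning the query and each document into vectors and retrieving the documents whose vectors are most similar, commonly measured with cosine similarity. The Python sketch below ranks documents this way; the tiny 3-dimensional vectors and document names are made up for illustration, whereas real embeddings would come from an embedding model (such as OpenAI’s embeddings endpoint) and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the names of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
    return ranked[:k]


# Hypothetical 3-dimensional "embeddings"; real ones come from an embedding model.
DOCS = {
    "pricing": [0.9, 0.1, 0.0],
    "refunds": [0.1, 0.9, 0.1],
    "shipping": [0.0, 0.2, 0.9],
}
```

The text of the top-ranked documents would then be placed into the prompt as reference text, as described in tactic 2.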
Wrapping Up…

In conclusion, mastering the art of prompt engineering is the key to unlocking the full potential of large language models like GPT-4.

OpenAI’s guide stresses the importance of giving these models precise instructions to get better results.

Remember, computers can’t read minds, but they excel at following well-crafted prompts.

Use these tips and tactics to get more specific and satisfying results from ChatGPT or any other chatbot.